MPI example code

MPI and OpenMP Example. Parallel programs enable users to fully utilize the multi-node structure of supercomputing clusters.

The rank of the process is read into int world_rank. The MPI interface consists of nearly two hundred functions, but in general most codes use only a small subset of them.

MPI is a directory of C programs which illustrate the use of the Message Passing Interface for parallel programming. The first code prints a simple message to the standard output. An MPI program first includes the MPI library header, mpi.h. Then it calls MPI_Init. This function must be called before any other MPI functions and is typically one of the first lines of an MPI-parallelized code.
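The code fragments quoted on this page (MPI_Init(NULL, NULL), int world_size, int world_rank, and a char buffer for the processor name) can be pieced together into a minimal hello world sketch. The exact wording of the printed message and the variable names processor_name and name_len are assumptions; the MPI calls themselves are standard.

```c
#include <mpi.h>
#include <stdio.h>

int main(int argc, char **argv)
{
    (void)argc; /* unused: MPI_Init is called with NULL arguments below */
    (void)argv;

    /* Initialize the MPI environment; this must precede any other MPI call. */
    MPI_Init(NULL, NULL);

    /* Get the number of processes in MPI_COMM_WORLD. */
    int world_size;
    MPI_Comm_size(MPI_COMM_WORLD, &world_size);

    /* Get the rank of this process. */
    int world_rank;
    MPI_Comm_rank(MPI_COMM_WORLD, &world_rank);

    /* Get the name of the processor this process runs on. */
    char processor_name[MPI_MAX_PROCESSOR_NAME];
    int name_len;
    MPI_Get_processor_name(processor_name, &name_len);

    /* Message text is an assumption, not taken from the page. */
    printf("Hello world from processor %s, rank %d out of %d processors\n",
           processor_name, world_rank, world_size);

    /* Shut down the MPI environment; no MPI calls may follow. */
    MPI_Finalize();
    return 0;
}
```

With most MPI implementations this would be compiled with the mpicc wrapper and started with mpirun or mpiexec, for example with four processes; each process then prints one line, in no guaranteed order.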

The simulation uses the velocity Verlet time integration scheme. A SLURM script is used to run an MPI hello world program as a batch job.

MCS 572 MPI C and F90 Source Code Examples. Observation: to compute the $u^{\ell+1}$ values on time level $\ell+1$, we need the two preceding time levels.

The number of processes is read into int world_size. bones_mpi.c, the source code. Non-blocking Message Passing Routines.

Using MPI with C. The sections below feature an example Slurm script for our HPC resources.

Blocking Message Passing Routines. We are going to expand on collective communication routines even more in this lesson by going over MPI_Reduce and MPI_Allreduce. Using scasub with mpirun and mpimon parallel run commands on the UIC ACCC Argo Cluster.

The Message Passing Interface (MPI) is a standard used to allow several different processors on a cluster to communicate with each other. MPI Message Passing Routine Arguments. SLURM scripts use variables to specify things like the number of nodes and cores used to execute your job, the estimated walltime for your job, and which compute resources to use (e.g., GPUs).

The particles interact with a central pair potential. MPI stands for Message Passing Interface and is a message-passing standard designed to work on a variety of parallel computing architectures.

MPI_WIN_LOCK_ALL and MPI_WIN_UNLOCK_ALL are not collective calls, but it is frequently useful to start shared access epochs to all processes from all other processes in a window. Some example MPI programs. The hello world program begins with its #include lines, defines int main(int argc, char** argv), and initializes the MPI environment with MPI_Init(NULL, NULL).

Multiply Matrix with Dynamic task assignment. BUFFON_MPI demonstrates how parallel Monte Carlo processes can set up distinct random number streams. In the time-stepping example, the two preceding time levels are $\ell$ and $\ell-1$. We assume that the $u^0_i$ and $u^1_i$ values for all indices $i$ are given as initial conditions. The main computation is a ...
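The page does not state the update formula for the three time levels, so the following is only a sketch under an assumption: the standard explicit central-difference scheme for the 1D wave equation, $u_i^{\ell+1} = 2u_i^{\ell} - u_i^{\ell-1} + C^2\,(u_{i-1}^{\ell} - 2u_i^{\ell} + u_{i+1}^{\ell})$, with fixed boundary values. The names wave_step and c2 (standing for $C^2$) are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/*
 * One explicit time step of an assumed 1D wave-equation scheme.
 * u_prev holds level l-1, u_curr holds level l, u_next receives level l+1.
 * c2 is the squared Courant number; the two end points are held fixed.
 */
void wave_step(const double *u_prev, const double *u_curr,
               double *u_next, size_t n, double c2)
{
    assert(n >= 2); /* need at least the two boundary points */

    /* Boundary values are simply carried over (assumed fixed boundaries). */
    u_next[0] = u_curr[0];
    u_next[n - 1] = u_curr[n - 1];

    /* Interior points combine the two preceding time levels. */
    for (size_t i = 1; i + 1 < n; ++i) {
        u_next[i] = 2.0 * u_curr[i] - u_prev[i]
                  + c2 * (u_curr[i - 1] - 2.0 * u_curr[i] + u_curr[i + 1]);
    }
}
```

A time loop would call wave_step repeatedly and rotate the three arrays after each step. In an MPI version, each process would own a contiguous slice of the indices and exchange its edge values with its neighbours before each step.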

Posted in code and tagged c, MPI, parallel-processing on Jul 13, 2016. Some notes from the MPI course at EPCC, Summer 2016. BONES_MPI passes a vector of real data from one process to another.

Bill Magro, Kuck and Associates, Inc. MPI status example.

The MPI standard defines the syntax and semantics of a library of routines. As a rule of thumb, no other MPI functions should be called before the program calls MPI_Init. MPI is the Message Passing Interface, a standard and series of libraries for writing parallel programs to run on distributed memory computing systems. Distributed memory systems are essentially a series of network ...

These are just examples that I used for learning. Examples of MPI programming. The name of the processor is read into a char buffer.

Gropp, Lusk, and Skjellum, Using MPI.

There are a number of implementations of this standard, including OpenMPI, MPICH, and MS MPI. A few small example MPI programs illustrate how MPI can be used. One example is a generic loosely synchronous iterative code using fence synchronization.

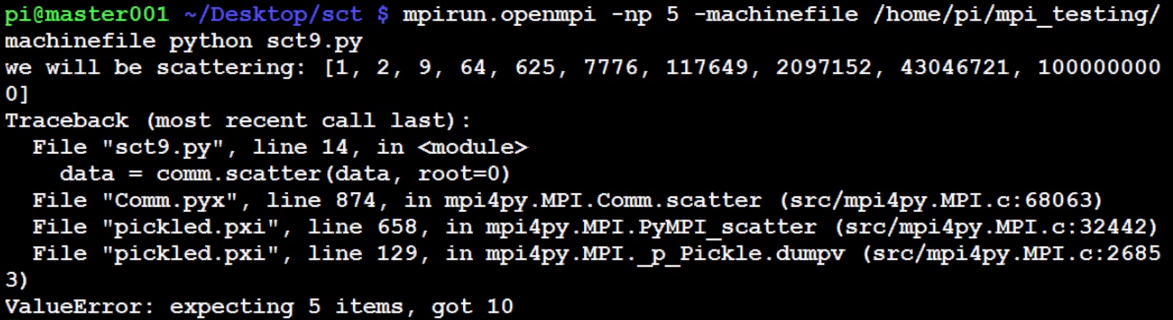

In the previous lesson, we went over an application example of using MPI_Scatter and MPI_Gather to perform parallel rank computation with MPI. Using the vmirun virtual machine parallel run command in batch job scripts on the NCSA Platinum Cluster. The call to MPI_Init tells the MPI system to do all of the necessary setup.

Then we call MPI_Comm_size to get the number of processes in MPI_COMM_WORLD, which corresponds to the number of processes launched. Note: all of the code for this site is on GitHub. This tutorial's code is under tutorials/mpi-reduce.

MPI: mpiexec vs mpirun.

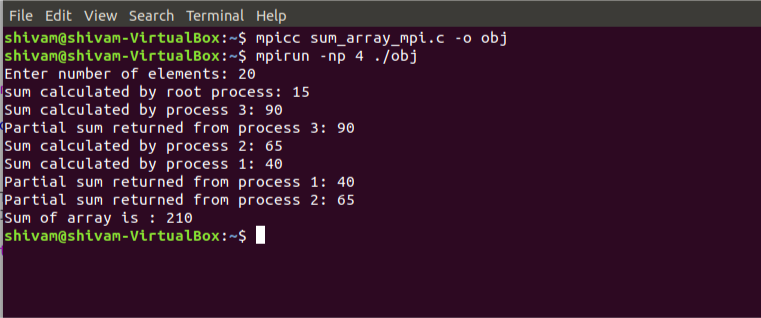

Convert the example program sumarray_mpi to use MPI_Scatter. The code below shows a common Fortran structure for including both master and slave segments in the parallel version of the example program just presented. This program implements a simple molecular dynamics simulation.

Examples and Tests. In the molecular dynamics program, parallelism is implemented via OpenMP directives.
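The molecular dynamics program described on this page is said to use the velocity Verlet time integration scheme. As a rough illustration only, the sketch below advances a single particle in one dimension; the harmonic force in harmonic_accel is an assumed stand-in for the central pair potential, and all function names are hypothetical rather than taken from that program.

```c
/* Acceleration as a function of position (unit mass assumed). */
typedef double (*accel_fn)(double x);

/* Assumed stand-in force: a harmonic spring pulling toward the origin. */
double harmonic_accel(double x)
{
    return -x;
}

/*
 * One velocity Verlet step of size dt.
 * x, v and a are updated in place; a must hold accel(*x) on entry.
 */
void velocity_verlet_step(double *x, double *v, double *a,
                          double dt, accel_fn accel)
{
    /* Advance the position using the current velocity and acceleration. */
    *x += *v * dt + 0.5 * (*a) * dt * dt;

    /* Evaluate the acceleration at the new position. */
    double a_new = accel(*x);

    /* Advance the velocity with the average of old and new acceleration. */
    *v += 0.5 * (*a + a_new) * dt;
    *a = a_new;
}
```

In a many-particle code, the acceleration evaluation is the expensive part, since it sums pair forces over all particles; that loop is the natural place for OpenMP directives or an MPI decomposition.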

Message Passing Interface (MPI) is a standardized and portable message-passing standard designed by a group of researchers from academia and ... bones_output.txt, the output file.

Projects written with MPI in Parallel. Group and Communicator Management Routines.

They are not production-quality codes; just use them for ... Portable Parallel Programming with the Message Passing Interface, MIT Press, 1994. Using the prun parallel run command in batch job scripts on the PSC TCS Cluster.

For example, the MPI system might allocate storage for message buffers, and it might decide which process gets which rank. MPI allows a user to write a program in a familiar language, such as C, C++, FORTRAN, or Python, and carry out a computation in parallel on an arbitrary number of cooperating computers. It was used as an example in an introductory MPI workshop.

In this tutorial we will be using the Intel C Compiler, GCC, IntelMPI, and OpenMPI to ...
